Simple Unicode-aware class in C++

A quick tour on different Unicode encode forms with an easy to extend C++ class example

If you just want the full source code click here.

Unicode basics

Unicode associates each possible character (letter, number, punctuation, emoji, etc…) with a unique number known as a codepoint.

Characters are the abstract representations of the smallest components of written language that have semantic value.

The set of all codepoints is known as codespace and Unicode states that there are 1114112

or 0x10FFFF codepoints.

Notationally, a codepoint is denoted by U+n, where n is four to six hexadecimal digits, omitting leading zeros,

unless the code point would have fewer than four hexadecimal digits—for example, U+0001, U+0012, U+0123,

U+1234, U+12345, U+102345.

The codespace is divided in 17 sections or ranges, known as planes, each plane has 65536 characters.

The first plane (Plane 0) is known as the BMP or Basic Multilingual Plane,

this plane contains English letters, and matches ASCII from 0 to 127.

The last four hexadecimal digits in each codepoint indicate a character’s position inside a plane.

The remaining digits indicate the plane. For example, U+23456 is found at location 0x3456 in Plane 2.

for more details see Unicode 16.0.0 / 2.9 Details of Allocation.

In this post we’re interested in encoding Unicode codepoints, which for our purposes it will mean how to represent a codepoint in binary for actual usage in computer systems. Let's start with a couple of observations.

Bits required

We can represent any codepoint in the codespace using 21 bits

This would leave us with 965040 unused numbers.

We also can encode the entire codespace using any number of bits greater than 21,

but we end up with a lot more unused numbers (which sometimes is preferable,

see UTF-32 encoding below).

Alternatively, we can use 16 bits + 20 bits

to precisely represent the Unicode codespace

(See UTF-16 encoding below).

Fixed vs variable width encoding

When representing a codepoint in memory we need to define how many bits will be used per character, fixed-width means that all codepoints will be encoded using the same number of bits, variable-width means that different characters can be encoded with different numbers of bits.

A specific encoding system like UTF8, UTF16 or UTF32 defines the number of bits to be used

for a given codepoint through a code unit, which is the minimal storable information in the system.

We say for example:

The code point U+1F92F: 🤯

- Requires 4 code units to be encoded in UTF-8 (where code unit is 8 bits)

- Requires 2 code units to be encoded in UTF-16 (where code unit is 16 bits)

- Requires 1 code unit to be encoded in UTF-32 (where code unit is 32 bits)

The code point U+0041: A

- Requires 1 code unit to be encoded in UTF-8 (where code unit is 8 bits)

- Requires 1 code unit to be encoded in UTF-16 (where code unit is 16 bits)

- Requires 1 code unit to be encoded in UTF-32 (where code unit is 32 bits)

UTF 32

It is a fixed-width encoding, each codepoint is directly mapped to a 32 bit code unit

uint32_t LetterA = 0x0041;

Can be useful when working on unicode text whose characters need to be indexed easily or require other per-codepoint processing:

uint32_t RandomUserEmoji = UserInputEmojis[4];

See Unicode 16.0.0 / 3.9.1 UTF-32

UTF 16

It is a variable-width encoding, a codepoint is represented using one code unit (16 bits)

if the character to be encoded is in the BMP plane or two code units otherwise.

Codepoints outside of the BMP are encoded using a surrogate pair,

which is a pair of two code units whose value is in the range [0xD800, DFFF],

this value is associated uniquely with a Unicode scalar value in the range [U+10000,U+10FFFF].

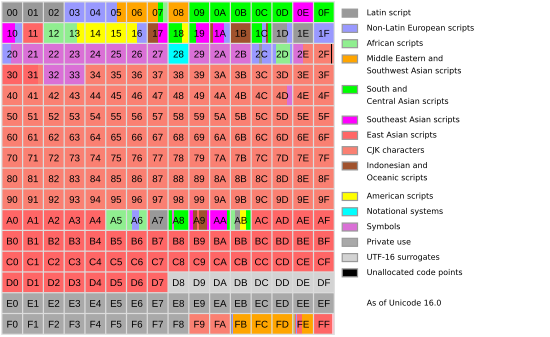

Figure from Wikipedia Unicode Plane article

The 6 highest bits of a code unit in a surrogate pair, denote which element of the pair the code unit is, observe the following bit pattern for an illustration:

// Possible numeric ranges of first element of the surrogate pair

// First 6 digits are 0b1101’10

From 0xD800 to 0xD8FF = From 0b1101’1000’0000’0000 to 0b1101’1000’1111’1111

From 0xD900 to 0xD9FF = From 0b1101’1001’0000’0000 to 0b1101’1001’1111’1111

From 0xDA00 to 0xDAFF = From 0b1101’1010’0000’0000 to 0b1101’1010’1111’1111

From 0xDB00 to 0xDBFF = From 0b1101’1011’0000’0000 to 0b1101’1011’1111’1111

// Possible numeric ranges of second element in the surrogate pair

// First 6 digits are 0b1101’11

From 0xDC00 to 0xDCFF = From 0b1101’1100’0000’0000 to 0b1101’1100’1111’1111

From 0xDD00 to 0xDDFF = From 0b1101’1101’0000’0000 to 0b1101’1101’1111’1111

From 0xDE00 to 0xDEFF = From 0b1101’1110’0000’0000 to 0b1101’1110’1111’1111

From 0xDF00 to 0xDFFF = From 0b1101’1111’0000’0000 to 0b1101’1111’1111’1111

From the above bit pattern we can also note that there are 10 free bits (per pair element)

that can be used to encode stuff, summing a total of 20 free bits

(Which can represent 1048576 distinct values).

See Unicode 16.0.0 / 3.9.2 UTF-16

UTF 8

It is a variable-width encoding, the high bits of each code unit indicate the part of the code unit sequence to which each byte belongs, for example.

0b0xxx’xxxx

Code units that represent an ASCII character, which is a number in the range [0, 127]

0b10xx’xxxx

For code units that are part of a code unit sequence but are not the leading (first) byte of the sequence

0b110x’xxxx

For code units that are the first byte in a code unit sequence of 2 bytes

0b1110’xxxx

For code units that are the first byte in a code unit sequence of 3 bytes

0b1111’0xxx

For code units that are the first byte in a code unit sequence of 4 bytes

See Unicode 16.0.0 / 3.9.3 UTF-8

Code

The following code aligns to the UTF-8 everywhere manifesto, that suggests using UTF-8 internally in your program and doing conversions when communicating with other APIs that receive other types of encodings.

Let's start detecting the size of an UTF-8 code unit sequence, note that there are better ways to implement this but here we're aiming for a simple and easy to understand approach.

enum class EUtf8SequenceSize : uint8_t

{

One, Two, Three, Four, Invalid

};

[[nodiscard]] EUtf8SequenceSize SizeOfUtf8Sequence(const unsigned char& Utf8Char)

{

// https://unicode.org/mail-arch/unicode-ml/y2003-m02/att-0467/01-The_Algorithm_to_Valide_an_UTF-8_String

if (Utf8Char <= 0x7f) // 0b0111'1111

{

// ASCII

return EUtf8SequenceSize::One;

}

else if (Utf8Char <= 0xbf) // 0b1011'1111

{

// Not a leading UTF8 byte, possibly something went wrong reading previous sequences

return EUtf8SequenceSize::Invalid;

}

else if (Utf8Char <= 0xdf) // 0b1101'1111

{

// Leading byte in a two byte sequence

return EUtf8SequenceSize::Two;

}

else if (Utf8Char <= 0xef) // 0b1110'1111

{

// Leading byte in a three byte sequence

return EUtf8SequenceSize::Three;

}

else if (Utf8Char <= 0xf7) // 0b1111'0111

{

// Leading byte in a four byte sequence

return EUtf8SequenceSize::Four;

}

// Unicode 3.1 ruled out the five and six octets UTF-8 sequence as illegal although

// previous standard / specification such as Unicode 3.0 and RFC 2279 allow the

// five and six octets UTF-8 sequence. Therefore, we need to make sure those value are not in the UTF-8

return EUtf8SequenceSize::Invalid;

}

Now a function to convert an UTF8 sequence into a unicode code point (encoded in UTF32).

[[nodiscard]] char32_t NextCodepointFromUtf8Sequence(const unsigned char*& Utf8Sequence)

{

if (*Utf8Sequence == 0)

{

return 0;

}

EUtf8SequenceSize NumOfBytes = SizeOfUtf8Sequence(*Utf8Sequence);

if (NumOfBytes == EUtf8SequenceSize::Invalid)

{

return 0; // End processing

}

unsigned char FirstByte = *Utf8Sequence;

if (NumOfBytes == EUtf8SequenceSize::One)

{

++Utf8Sequence; // Point to the start of the next UTF8 sequence

return FirstByte;

}

unsigned char SecondByte = *(++Utf8Sequence);

if (SecondByte == 0)

{

return 0;

}

if (NumOfBytes == EUtf8SequenceSize::Two)

{

++Utf8Sequence; // Point to the start of the next UTF8 sequence

return

((FirstByte & 0b0001'1111) << 6) |

(SecondByte & 0b0011'1111);

}

unsigned char ThirdByte = *(++Utf8Sequence);

if (ThirdByte == 0)

{

return 0;

}

if (NumOfBytes == EUtf8SequenceSize::Three)

{

++Utf8Sequence; // Point to the start of the next UTF8 sequence

return

((FirstByte & 0b0000'1111) << 12) |

((SecondByte & 0b0011'1111) << 6) |

(ThirdByte & 0b0011'1111);

}

unsigned char FourthByte = *(++Utf8Sequence);

if (FourthByte == 0)

{

return 0;

}

++Utf8Sequence; // Point to the start of the next UTF8 sequence

return

((FirstByte & 0b0000'0111) << 18) |

((SecondByte & 0b0011'1111) << 12) |

((ThirdByte & 0b0011'1111) << 6) |

(FourthByte & 0b0011'1111);

}

The above functions can be easily used to create utility methods for our Utf8String class,

below is a function that calculates how many code points does the UTF8 string has.

int32_t Utf8String::CodePointsLen() const

{

if (Len() == 0)

{

return 0;

}

int32_t TotalCodePoints = 0;

const unsigned char* Utf8Str = GetRawData();

while (NextCodepointFromUtf8Sequence(Utf8Str))

{

++TotalCodePoints;

}

return TotalCodePoints;

}

A function that converts from UTF8 to UTF32

std::u32string Utf8String::ToUtf32() const

{

std::u32string Utf32Output;

if (Len() == 0)

{

return Utf32Output;

}

const unsigned char* Utf8Str = GetRawData();

while (*Utf8Str != 0)

{

char32_t UnicodeCodePoint = NextCodepointFromUtf8Sequence(Utf8Str);

Utf32Output.push_back(UnicodeCodePoint);

}

return Utf32Output;

}

A function that converts from UTF8 to UTF16

std::u16string Utf8String::ToUtf16() const

{

std::u16string Utf16Output;

if (Len() == 0)

{

return Utf16Output;

}

const unsigned char* Utf8Str = GetRawData();

while (*Utf8Str != 0)

{

char32_t UnicodeCodePoint = NextCodepointFromUtf8Sequence(Utf8Str);

if (UnicodeCodePoint < 0x1'0000) // 0b0001'0000'0000'0000'0000

{

Utf16Output.push_back(UnicodeCodePoint);

}

else

{

UnicodeCodePoint -= 0x1'0000;

char16_t HighSurrogate = 0xd800 + ((UnicodeCodePoint >> 10) & 0x3FF); // 0x3FF == 0b0011'1111'1111

char16_t LowSurrogate = 0xdc00 + (UnicodeCodePoint & 0x3FF);

Utf16Output.push_back(HighSurrogate);

Utf16Output.push_back(LowSurrogate);

}

}

return Utf16Output;

}

Understanding the wchar mess

Size of wchar_t can be different depending on the platform, on Windows it’s 2 bytes (16 bits) and on

most Unix systems it’s 4 bytes (32 bits), why?

In the beginning 7 bits were used to encode ASCII characters (numbers from 0 to 127), then multiple working groups tried to define rules for the usage of one extra bit (8 bits in total) to represent characters outside of the ASCII range.

ISO produced ISO-8859-1, ISO-8859-2, and 13 more standards, Microsoft created Windows-1252

a.k.a CP-1252

(and a bunch of other code pages) and referred to them as "ANSI code pages", which is technically

incorrect because they never became ANSI standards, in words of Cathy Wissink:

The term "ANSI" as used to signify Windows code pages is a historical reference, but is nowadays a misnomer that continues to persist in the Windows community. The source of this comes from the fact that the Windows code page 1252 was originally based on an ANSI draft, which became ISO Standard 8859-1.

However, in adding code points to the range reserved for control codes in the ISO standard, the Windows code page 1252 and subsequent Windows code pages originally based on the ISO 8859-x series deviated from ISO. To this day, it is not uncommon to have the development community, both within and outside of Microsoft, confuse the 8859-1 code page with Windows 1252, as well as see “ANSI” or “A” used to signify Windows code page support."

Then people noted that if they wanted to encode all possible characters in a unified way,

more than 8 bits were required, how many?

16 bits according to ISO (first draft of ISO/IEC-10646) and people from Xerox and Apple (Unicode 1.0).

In 1990 two initiatives existed for a unified character set,

ISO/IEC-10646 and Unicode, ISO defined an encoding form known as UCS-2

which was a fixed width encoding of 16 bits, in 1991 ISO and Unicode agreed to

synchronize their codepoint assignments and UCS-2 became the standard way to encode Unicode codepoints.

Then the cool guys at Microsoft decided to support Unicode, in the process they invented the data

type wchar with a size of 2 bytes, the “w” stands for wide to outline the

fact that this is a character wider than 1 byte.

Time passed and the smart guys at ISO and Unicode noted again that they needed more than 16 bits

to encode all characters of the world, the solution was the introduction of the surrogate

pairs of UTF-16 stated in Unicode 2.0 in 1996, Also UTF-32 (UCS-4) emerged and Unix systems

decided to make their wchar to be a size of 4 bytes instead of 2 for easier unicode support.

Links

- Unicode 16.0.0 / 2.2.3 Characters, No Glyphs

- Unicode 16.0.0 / 2.2.4 Code Points and Characters

- Unicode 16.0.0 / 2.9 Details of Allocation

- Unicode 16.0.0 / 3.9.1 UTF-32

- Unicode 16.0.0 / 3.9.2 UTF-16

- Unicode 16.0.0 / 3.9.3 UTF-8

- Unicode 16.0.0 / A.1.1 Code Points

- UTF-8 everywhere manifesto

- Unicode WinXP, Cathy Wissink

- Wide Character from Wikipedia

- Differences between ANSI, ISO-8859-1 and MacRoman character sets

- Basic Multilingual Plane by Wikipedia

Credits

Written by Romualdo Villalobos